Your agent has a Karate Kid problem

The hard part of building AI agents isn't the tech.

It's admitting no one knows what your experts actually do all day.

Before robots can fold laundry, someone has to teach them how humans fold laundry. Before AI agents can handle your customer escalations, someone has to teach them how your best CSR handles escalations.

Same problem.

Different machines.

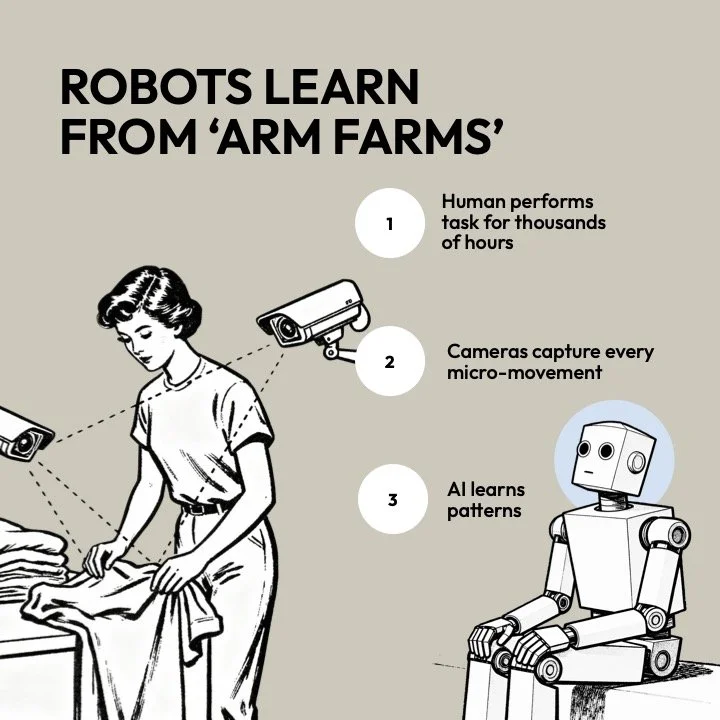

In robot training, they're called "arm farms": facilities in places like India where workers repeat tasks while cameras capture every movement. Not to build robots. Just to translate human expertise into machine-readable patterns.

Agents have the same Karate Kid problem as robots:

Mr. Miyagi can't just tell Daniel how to block a punch. He has to make him wax the car 10,000 times until the motion becomes instinct. Only then can he explain what Daniel's been learning.

Companies like Figure AI are spending millions capturing footage from 100,000 homes because you can't automate work you don't understand. Early humanoid deployments stay in "closed environments where traffic is limited and predictable" for exactly this reason.

Your AI agents need the same thing.

The bottleneck isn't the technology.

It's understanding your work well enough to teach it.

Not the process map—the real process.

Sarah knows approvals come in on Thursdays, so she pings at noon if nothing has hit her inbox. Mike can tell from an email's tone whether to loop in Legal now or wait a week.

Most companies skip this step.

They point AI at documented processes that nobody actually follows.

Then wonder why the agent keeps making rookie mistakes.

The companies winning at AI?

They do their arm farm homework first.

They watch their experts work.

Capture the invisible decision points.

Understand what five years of experience looks like in motion.

The robots aren't replacing those workers in India.

They're learning from them.

Before you can automate the work, you must understand the work.

What expertise in your org would break everything if the person holding it left tomorrow?